Today we are going to look at how to create a truly private AKS cluster with no public IPs or endpoints.

If you have previously built AKS clusters, you will have noticed that during provisioning AKS creates a public IP and a public load balancer. This public IP is used for two reasons:

1) Allow connection to the AKS Control Plane

2) Default route NAT for the AKS Node Pools to talk to the Control plane.

If you work for an organisation with higher security requirements and are trying to build AKS clusters to service internal applications, there can be some pushback from security teams, as having a public IP connected to a VNET that is meant to be private is a big no-no. As such, some organisations have Azure Policies in place preventing the creation of public IP addresses without an exception.

The biggest risks with this are that 1) as a developer, if you do not write your YAML files properly you could accidentally make your internal application public facing, and 2) the AKS control plane is accessible over the internet.

To resolve these security issues, Microsoft introduced 3 features to their AKS Clusters:

1) Internal only Load Balancer

2) Using User Defined routes for the Node Pools

3) Private endpoint for the AKS Control plane.

Today we are going to see how to use all of these features to build a truly private AKS cluster and deploy a private application.

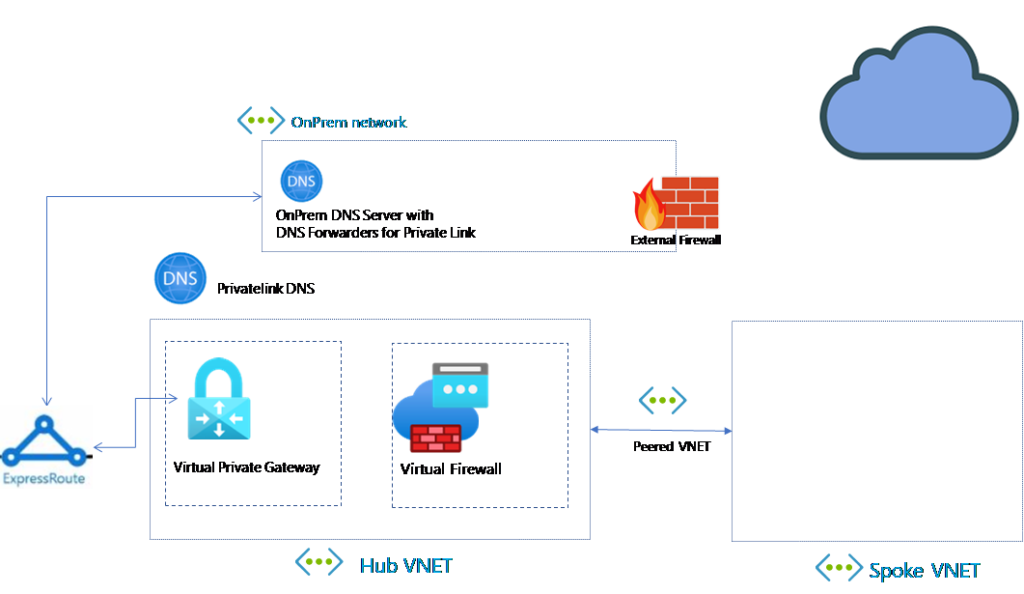

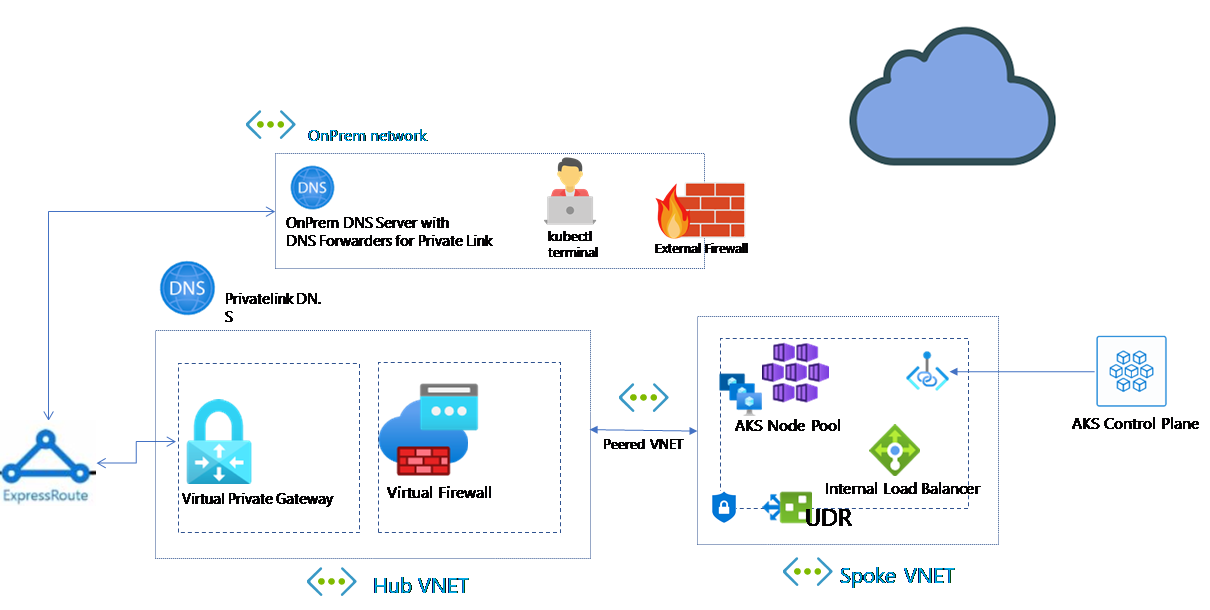

There are a few things you need to have set up as prerequisites for this to work.

1) A virtual appliance, virtual network gateway or Azure Firewall to be your default route out to the internet. In my case, my lab has an ExpressRoute connection to our on-prem environment.

2) A DNS server set up with Private Link forwarding. For more information, check out the link here.

3) A basic understanding of how your networking is set up.

In my environment I already have all of this set up, and my canvas looks something like this.

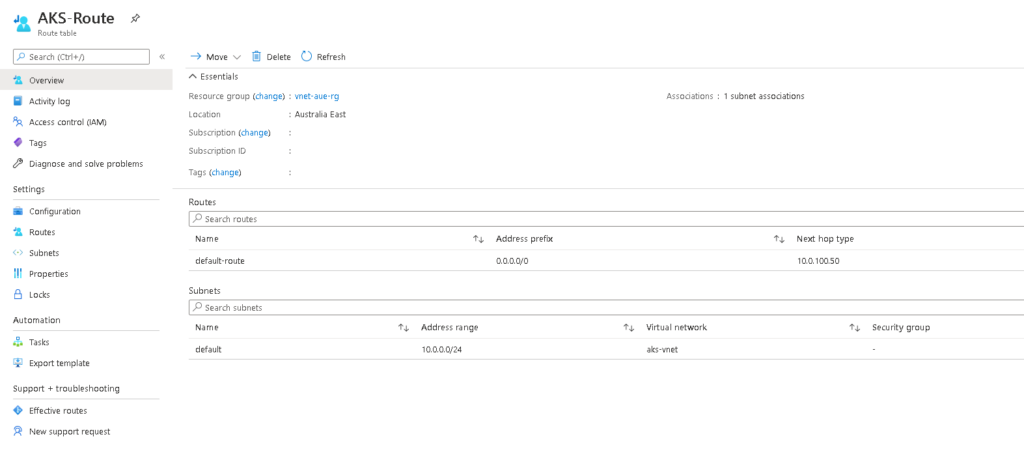

To make sure this works, we will first create a User Defined Route (UDR) for our spoke VNET and subnet. The UDR must have a default route of 0.0.0.0/0 pointing to either a virtual network gateway or a virtual appliance, and it must be associated with your subnet.
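
As a rough sketch of that step with the az CLI, the function below creates a route table, adds the 0.0.0.0/0 default route and attaches it to the subnet. The function name, the route table name `aks-egress-rt`, the route name `default-route` and the next-hop IP in the usage note are all assumptions for illustration, not values from my environment. It also assumes the next hop is a virtual appliance; adjust `--next-hop-type` if you route via a gateway.

```shell
# Sketch only: wraps three az CLI calls in a function so nothing runs until you call it.
# Arguments: <resource-group> <vnet-name> <subnet-name> <next-hop-ip> [route-table-name]
create_default_udr() {
  udr_rg="$1"
  udr_vnet="$2"
  udr_subnet="$3"
  udr_next_hop="$4"
  udr_table="${5:-aks-egress-rt}"   # hypothetical default name

  # 1. Create the route table.
  az network route-table create \
    --resource-group "$udr_rg" \
    --name "$udr_table" || return 1

  # 2. Add the default route pointing at the virtual appliance.
  az network route-table route create \
    --resource-group "$udr_rg" \
    --route-table-name "$udr_table" \
    --name default-route \
    --address-prefix 0.0.0.0/0 \
    --next-hop-type VirtualAppliance \
    --next-hop-ip-address "$udr_next_hop" || return 1

  # 3. Associate the route table with the subnet the AKS nodes will live in.
  az network vnet subnet update \
    --resource-group "$udr_rg" \
    --vnet-name "$udr_vnet" \
    --name "$udr_subnet" \
    --route-table "$udr_table" || return 1
}
```

You would call it with something like `create_default_udr vnet-rg auedevvnet01 default 10.0.0.4`, where 10.0.0.4 stands in for the private IP of your own appliance or firewall.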

Now we are going to create our AKS cluster. For this we are going to use the az CLI:

    > $Subnet="/subscriptions/***/resourceGroups/vnet-rg/providers/Microsoft.Network/virtualNetworks/auedevvnet01/subnets/default"
    > az aks create --resource-group montytest-aue-rg --name monty-private --outbound-type userDefinedRouting --network-plugin azure --vnet-subnet-id $Subnet --service-principal *** --client-secret *** --node-count 1 --enable-private-cluster

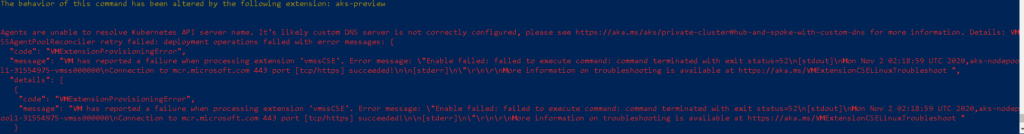

NOTE: The first time I tried to run this, it failed with the error below:

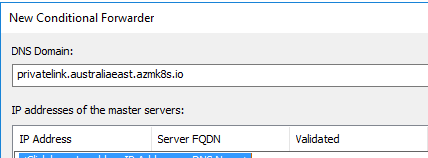

I learnt that even though I had Private Link set up, I had to add a conditional forwarder for *.privatelink.australiaeast.azmk8s.io to my Private Link DNS server.

Trying this again, the cluster built successfully 🙂.

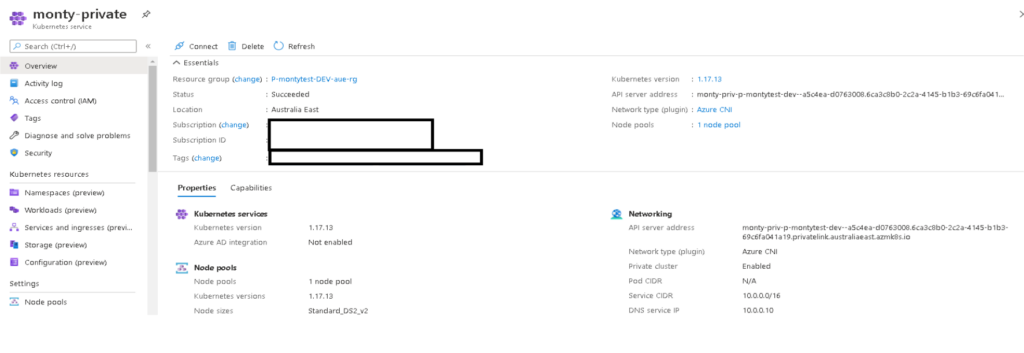

You can see the API server is now a privatelink URL, and looking it up with nslookup shows that it resolves to an internal address.

    C:\WINDOWS\system32>nslookup monty-priv-p-montytest-dev--a5c4ea-d0763008.6ca3c8b0-2c2a-4145-b1b3-69c6fa041a19.privatelink.australiaeast.azmk8s.io
    Server:  localhost.domain
    Address:  10.0.100.12
    Non-authoritative answer:
    Name:    monty-priv-p-montytest-dev--a5c4ea-d0763008.6ca3c8b0-2c2a-4145-b1b3-69c6fa041a19.privatelink.australiaeast.azmk8s.io
    Address:  10.200.120.10
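
If you want to script this check rather than eyeball it, a small helper like the one below can tell you whether a resolved address falls inside the RFC 1918 private ranges. The function name `is_rfc1918` is my own invention for this sketch. It is a simple prefix match, so it assumes you pass it a well-formed IPv4 address and does not validate the input.

```shell
# Sketch only: returns 0 if the IPv4 address is in 10.0.0.0/8, 172.16.0.0/12
# or 192.168.0.0/16, and 1 otherwise. Does not validate that the input is a real IP.
is_rfc1918() {
  case "$1" in
    10.*) return 0 ;;
    192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_rfc1918 10.200.120.10 && echo "private"` would confirm that the address returned above is an internal one.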

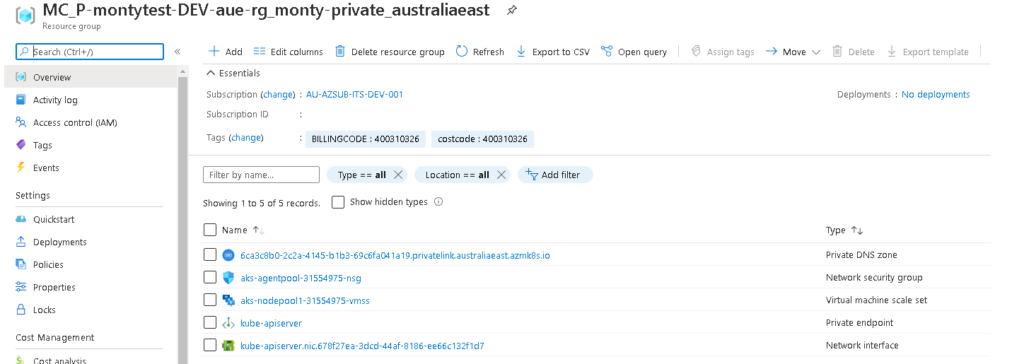

Looking under the covers at the resources that get created in the MC_ node resource group, we can see that there is no default public IP address and that there is a private endpoint for the API server.

The two main arguments that we added to the command above are --outbound-type userDefinedRouting and --enable-private-cluster. The first removes the requirement for a public IP to be provisioned, as it tells AKS that outbound traffic from the worker nodes will follow the user-defined route on the subnet. The second creates the private endpoint for the API server and sets up the Private Link DNS zone.
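
To double check that both settings took effect, you can query the cluster with `az aks show`. This is a minimal sketch, and the function name is an assumption of mine. The query reads the `networkProfile.outboundType`, `apiServerAccessProfile.enablePrivateCluster` and `privateFqdn` properties of the managed cluster.

```shell
# Sketch only: prints the outbound type, private cluster flag and private FQDN.
# Arguments: <resource-group> <cluster-name>
show_private_cluster_settings() {
  az aks show \
    --resource-group "$1" \
    --name "$2" \
    --query "{outboundType: networkProfile.outboundType, privateCluster: apiServerAccessProfile.enablePrivateCluster, privateFqdn: privateFqdn}" \
    --output table
}
```

Run it as `show_private_cluster_settings montytest-aue-rg monty-private`. For a cluster built as above, I would expect the outbound type to show userDefinedRouting and the private cluster flag to be true.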

Connecting to this cluster works the same as usual; you just need to be on the internal network.

    $ az login
    To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code QZUMV5ZFN to authenticate.
    [
      {
        "cloudName": "AzureCloud",
        "homeTenantId": "***",
        "id": "**",
        "isDefault": true,
        ...
      }
    ]
    $ az aks get-credentials -n monty-private -g P-montytest-DEV-aue-resourceGroups
    $ kubectl get nodes
    NAME                                STATUS   ROLES   AGE   VERSION
    aks-nodepool1-31554975-vmss000000   Ready    agent   39m   v1.17.13

Creating a new pod works the same as usual. The only difference is the load balancer Service, where you need to add an annotation to state that it is internal.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
            name: nginx
            type: webserver
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: 250m
                memory: 64Mi
              limits:
                cpu: 500m
                memory: 256Mi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: internal-app
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    spec:
      type: LoadBalancer
      ports:
      - port: 80
      selector:
        app: nginx

    $ kubectl apply -f test-internal-aks.yaml
    deployment.apps/nginx created
    service/internal-app created
    $ kubectl get svc --watch
    NAME           TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)        AGE
    internal-app   LoadBalancer   10.0.73.238   <pending>        80:30085/TCP   19s
    kubernetes     ClusterIP      10.0.0.1      <none>           443/TCP        80m
    internal-app   LoadBalancer   10.0.73.238   10.200.228.228   80:30085/TCP   87s

Adding this annotation to the Service is what creates an internal load balancer:

    kind: Service
    metadata:
      name: internal-app
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"

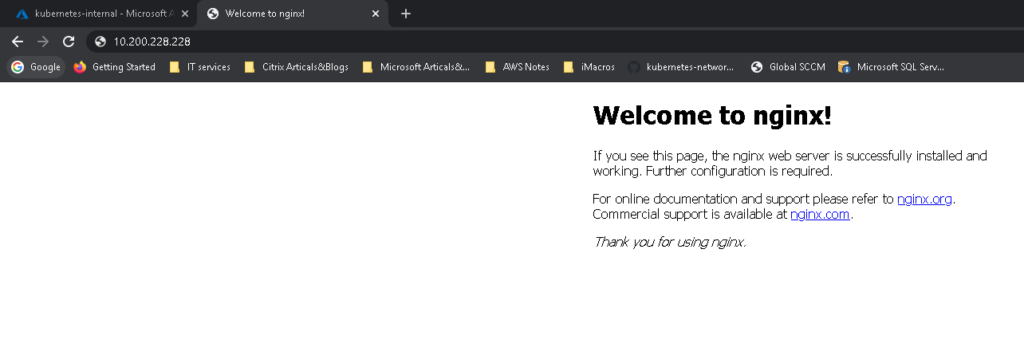

Testing it out from the internal network, and there you go.
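
One way to run that test from a machine on the internal network is sketched below. It reads the load balancer IP from the Service status with kubectl and then requests the nginx default page with curl. The function name is hypothetical, and it assumes the Service listens on plain HTTP on port 80 as in the manifest above.

```shell
# Sketch only: looks up the Service's load balancer IP and prints the HTTP status code.
# Argument: <service-name>
check_internal_service() {
  svc_name="$1"

  # The IP appears in the Service status once Azure has provisioned the internal LB.
  svc_ip="$(kubectl get service "$svc_name" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
  if [ -z "$svc_ip" ]; then
    echo "no load balancer IP assigned to $svc_name yet" >&2
    return 1
  fi

  # Discard the body and print only the status code, giving up after 5 seconds.
  curl --silent --show-error --max-time 5 \
    --output /dev/null --write-out '%{http_code}\n' \
    "http://$svc_ip/"
}
```

Calling `check_internal_service internal-app` should print the HTTP status code returned by nginx if the internal load balancer is reachable from where you are running it.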

And we are done: a fully private AKS cluster 🙂

If you have any questions, please feel free to comment below.

Hi this is a great post thanks

Just wanted to check if we can change the load balancer from a public IP to a private one for a running AKS cluster, instead of creating a new cluster with a private load balancer IP, which is a straightforward process?

What will be user defined route in that perspective where we have private cidr space using azure express route and aks public load balancer isn’t accessible?

Thanks

Thanks for your message. I have not tested this, but you can try running `az aks update --name <cluster-name> --resource-group <resource-group> --outbound-type userDefinedRouting`. As for your user-defined route, you will still need to set up a default 0.0.0.0/0 route.